HYDO Velovision

2020 ~ 2022

What started as a self-directed exercise in computer vision and self-driving turned into a year-long endeavor to create the world’s first computer vision-based active cycling assistance system. In that time, I wrote a patent-pending overtaking prediction program that achieves real-time performance on the low-power NVIDIA Jetson Nano module. I was also able to design and assemble fully working, 3D-printed hardware prototypes thanks to support from the South Korean government through the ‘K-Startup’ fund.

Sub-Projects

- Product website (hydo.ai): This fancy website has photos, videos, and even more information about HYDO. (Korean version)

- Velovision: See all of the code for HYDO right here!

- SeoulBike Dataset: A large annotated object detection dataset, 300GB in total, consisting mainly of pedestrians and cyclists in Seoul. A subset of the dataset will soon be made public for academic research. Velovision was trained from scratch on this dataset.

- Synthlabel: A modular synthetic dataset generator that supports retro-labeling (read blog post) and bounding box fusion (read blog post). This tool enabled me to efficiently generate accurate synthetic labels for deep learning without expensive hand labeling.

ClassySORT

2019

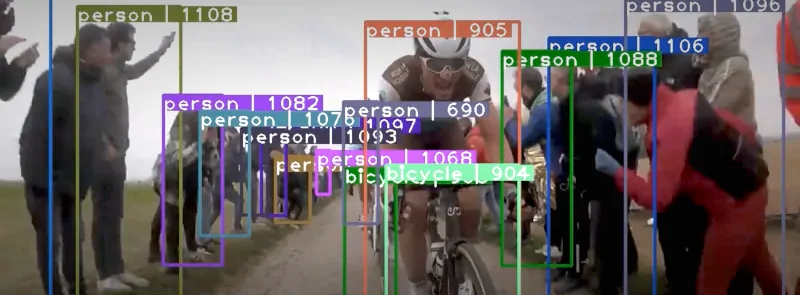

ClassySORT adds a Kalman filter-based object tracker (Simple Online and Realtime Tracking¹) on top of YOLOv5, a popular open-source object detection model. This ready-to-use, open-source object tracker has enabled researchers to quickly prototype new applications in robotics and artificial intelligence. In this example, ClassySORT was used to replicate a human-robot goal transfer study.²
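To give a rough idea of what "tracking on top of detection" means, here is a minimal sketch of the association step that links the detector's boxes in a new frame to existing tracks. It is not ClassySORT's actual code: the function names `iou` and `associate` and the `iou_threshold` value are hypothetical, the matching is a simple greedy pass rather than the Hungarian algorithm described in the SORT paper, and the Kalman filter that would predict each track's box is left out.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, iou_threshold=0.3):
    """Greedily match track IDs to detection indices by descending IoU.

    tracks: dict mapping a track ID to that track's predicted box
            (in a full tracker this box would come from a Kalman filter).
    detections: list of boxes from the detector for the current frame.
    Returns (matches, unmatched), where matches maps track ID -> detection
    index and unmatched lists detection indices that would start new tracks.
    """
    # Score every (track, detection) pair, best overlap first.
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        key=lambda p: p[0],
        reverse=True,
    )
    matches, used_dets = {}, set()
    for score, tid, j in pairs:
        if score < iou_threshold:
            break  # remaining pairs overlap too little to be the same object
        if tid in matches or j in used_dets:
            continue  # each track and each detection is used at most once
        matches[tid] = j
        used_dets.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used_dets]
    return matches, unmatched


if __name__ == "__main__":
    tracks = {1: (0, 0, 10, 10), 2: (50, 50, 60, 60)}
    detections = [(51, 50, 61, 60), (1, 0, 11, 10), (200, 200, 210, 210)]
    print(associate(tracks, detections))
```

In this toy frame, both existing tracks are matched to the boxes that shifted by one pixel, and the far-away third box is left unmatched, which is where a tracker would create a new ID.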

Try it out and give me a ⭐ if you like it!

1. Bewley, A., Ge, Z., Ott, L., Ramos, F., & Upcroft, B. (2016). Simple online and realtime tracking. 2016 IEEE International Conference on Image Processing (ICIP). https://doi.org/10.1109/icip.2016.7533003

2. Ramirez-Amaro, K., Beetz, M., & Cheng, G. (2017). Transferring skills to humanoid robots by extracting semantic representations from observations of human activities. Artificial Intelligence, 247, 95–118. https://doi.org/10.1016/j.artint.2015.08.009